Seems like so far, as far as anybody knows, only the Zotac “Solid” cards are affected.

First paragraph reads:

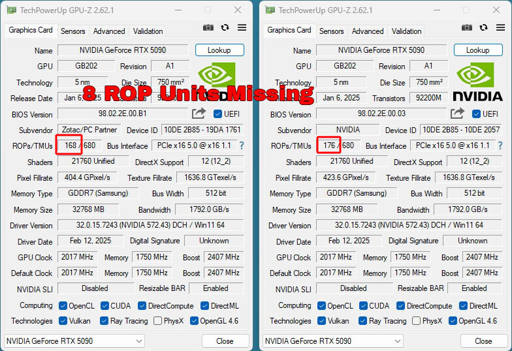

TechPowerUp has discovered that there are NVIDIA GeForce RTX 5090 graphics cards in retail circulation that come with too few render units, which lowers performance. Zotac’s GeForce RTX 5090 Solid comes with fewer ROPs than it should—168 are enabled, instead of the 176 that are part of the RTX 5090 specifications. This loss of 8 ROPs has a small, but noticeable impact on performance. During recent testing, we noticed our Zotac RTX 5090 Solid sample underperformed slightly, falling behind even the NVIDIA RTX 5090 Founders Edition card. At the time we didn’t pay attention to the ROP count that TechPowerUp GPU-Z was reporting, and instead spent time looking for other reasons, like clocks, power, cooling, etc.

ROP?

Basically, the ROPs are in charge of the final stage of rendering a frame.

They are discrete, physical components of the GPU.

GPUs at this point are quite complex boards of many specialized kinds of processors passing information to and from each other.

Tensor cores, RT cores, CUDA cores, ROPs, etc… these are all specialized processors, specializing in different kinds of computations.

ROPs, Raster Output Processors, do varying kinds of postprocessing on the almost final stage of a rendered frame, assemble the data from other parts of the GPU and then push the finalized, rastered pixels to the frame buffer.

A rough analogy would be that ROPs are in charge of the final editing pass on a paper or article before it’s published, with the analogous ‘research’, ‘fact verifying’, and ‘rough draft’ having already been done by other parts of the GPU first.

Maybe another analogy would be that ROPs are the ‘final assembly’ of a frame, if constructing a frame was like building a car or aircraft.

A simpler, more literal explanation is that the ROPs perform the final stage of rendering a frame before the GPU actually pushes it out for you to see.

So… if the GPU is missing 8 ROPs… the GPU is basically bottlenecking itself, internally.
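For a sense of scale, here is a quick back-of-the-envelope calculation in Python using only the two numbers from the quoted paragraph (176 ROPs in the spec, 168 enabled). The variable and function names are made up for illustration. The result is only the share of ROPs that are missing; it says nothing precise about how much performance is lost, since that depends on how ROP-bound a given game is.

```python
# ROP counts taken from the quoted TechPowerUp paragraph.
SPEC_ROPS = 176      # what the RTX 5090 spec calls for
ENABLED_ROPS = 168   # what the affected Zotac Solid card reports


def missing_fraction(spec: int, enabled: int) -> float:
    """Share of the specified ROPs that are not enabled (0.0 to 1.0)."""
    return (spec - enabled) / spec


missing = SPEC_ROPS - ENABLED_ROPS
share = missing_fraction(SPEC_ROPS, ENABLED_ROPS)

# Prints: 8 of 176 ROPs missing (4.5%)
print(f"{missing} of {SPEC_ROPS} ROPs missing ({share:.1%})")
```

So roughly one in 22 ROPs is gone, which fits the article's description of a small but noticeable hit.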

Wow thank you for the explanation! That made it very clear.

Thank you for the explanation!

The article is terribly written. You need to scroll way down in the article to find out what ROP means, despite the article using the acronym several times.

Ok, it’s Raster Operations Pipeline for anyone who doesn’t want to read that far.

Edit: In this context it is probably Raster Output Processor, as sp3ctr4l points out.

Technically, the Raster Operations Pipeline is the entire process of actually rendering a frame, or, contextually and depending on what precise terminology is being used by what company for which architecture, it may only refer to the final stages of actually rendering the frame.

Raster Operations Pipeline is the process, the systematized flow of different stages of rendering, the verb or action that the physical Raster Output Processors actually perform. EDIT: Or perform a part of, a stage of.

In this case, the Raster Operations Pipeline is hampered by the GPU missing 8 out of 176 Raster Output Processors.

I.e., it’s missing a number of discrete physical components from the GPU board, and thus is less performant at actually rendering the Raster Operations Pipeline.

Nvidia is … pretty much very obviously at this point going out of its way to make the terminology around its GPUs as confusing as possible, so that they can more easily do the equivalent of Star Trek esque technobabble to wave away and dismiss anyone who tries to actually dive in and understand what they’re actually doing.

Just imagine spending 4k€ on a graphics card only to find it’s partially defective.

Even better, imagine spending that with a scalper who isn’t going to honour a warranty.

only one idiot to blame in that case

For those not clicking through and reading all the way to the bottom:

More cards are apparently affected, they say Nvidia acknowledged the issue, is telling them that users can get a replacement from the manufacturer and that they’ve addressed the root cause for future units.

The root cause was NVIDIA trying to peddle defective dies because they decided to slot up every SKU to dig more money out of their regarded fans. That cut-down 5090 would likely be sold as a 5080. The current xx70xx SKUs are 5060s in reality and so on and so forth. In all honesty, you get what you deserve. As soon as the hobby became popular, sense and knowledgeable users went out the window.

Man, I am as frustrated with Nvidia as anybody, but that type of vaguely-informed ranting really makes me go on the defensive.

For one thing, the dumb gatekeeping at the end is absurd. I’ve been building PCs since the early 90s, there are more informed users now than there have ever been, by far. And those that aren’t regurgitate whatever they hear on Youtube from tech influencers anyway. Fortnite casuals aren’t what’s keeping you from owning a 5090, friend.

Speaking of uninformed users regurgitating half-understood Youtube talking points, Nvidia certainly wasn’t going to ship 5090s with a single damaged ROP unit as 5080s, those two cards are built on entirely different dies. It’s very likely that they’ll have some 5080SuperTi thing coming out eventually perhaps built on cut down GB202 instead of the GB203 in the base model, but it’d certainly not be cutting down an ROP and leaving everything else the same. That’s not even the 5090D spec. Plus Nvidia confirms other cards in the 50 series are also affected.

More importantly, neither of us knows how these made it to market. There’s certainly at the very least some lax QA, and I’m sure there was pressure to get as many of the very limited 5090s to retail as possible, but crappy as the 50 series is in many areas I genuinely doubt Nvidia would be so dumb as to deliberately put chips with this very specific, very consistent fault in the pipes hoping nobody would notice a performance drop and peek at GPU-Z even once. That’s not how this works. I’d love to know what actually happened to cause this, though.

So yes, the 40 and 50 series are named one step too high on the stack. Yes, the pricing increases have been wild, and it’s frustrating that demand is high enough to support it and regulators aren’t stepping in to moderate the MSRP mishandling. Yes, Nvidia mismanaged the 50 series launch in multiple ways, from bad connector design to rushing the 5090 to misleading marketing on frame generation and probably underbaked drivers. That doesn’t mean every issue is the same issue, and it certainly doesn’t mean that a lack of “knowledgeable users” is to blame.

Dawg why are you so slurpy over what is clearly money grubbing.

I guess people jumping to unfounded conspiratorial conclusions while accusing the plebs of being the cause of all ills doesn’t sit well with me these days.

I wonder why that’d be.

Your last paragraph literally counters everything you said but the last sentence.

So yes, the 40 and 50 series are named one step too high on the stack. Yes, the pricing increases have been wild, and it’s frustrating that demand is high enough to support it and regulators aren’t stepping in to moderate the MSRP mishandling. Yes, Nvidia mismanaged the 50 series launch in multiple ways, from bad connector design to rushing the 5090 to misleading marketing on frame generation and probably underbaked drivers.

That’s it

It really, really doesn’t.

Yes, I think this is the case too, especially with this gen.

Flashback to the 970 debacle

Can you remember a fuckup-free Nvidia launch?

Un-releases, melting connectors (part 1), cropped VRAM.

GeForces 1-3 were OK on launch from what I remember…

10 series there was backlash over them advertising an MSRP and getting reviewers to assess the cards at that value, but having “founders pricing”, where the initial run of cards (that IIRC were Nvidia reference cards only) were far more than the MSRP.

20 series they ramped up prices despite small performance gains, saying that it’s due to ray tracing, and that when new ray tracing games came out the difference would be incredible. Ray traced games didn’t actually come out until long after, and the RT performance was straight up unplayable on any card. But enough time had gone past that people couldn’t return the cards by the time that was known.

30 series there were the supply issues, 3090s and 3080s melting in a few games (most prominently in New World), outrageously fake MSRPs (Nvidia was actually selling the GPUs to partners for more than the MSRP!), and really bad levels of VRAM that caused issues (8GB on the 3070/3070 Ti).

I’d be pissed too if my 5090 didn’t come with Really Opulent Poodles

And really, can one ever have too many rodents opining posthumously?

I figure there’s a reason that Zotac and PNY are always the cheapest cards. No idea why anybody would care about the tiny saving if they’re wasting $2k on a GPU…

Zotac and PNY don’t make the chips; NVIDIA is at fault here. Some people care because they don’t actually have a ton of money, but gaming is their hobby and they’ve saved up 2-3 years so they can afford a high end card because it’s worth it to them. The extra $50 or $100 represents the difference between being able to afford the card and not, or maybe having a couple of extra games to play on it.

But Nvidia have clearly sold them the chips and told them that some of those cores aren’t working. And they said OK and hoped nobody would really notice.

What NVIDIA have told AIBs is completely unknown, but NVIDIA certainly hold all the power, if NVIDIA say ship them, they ship them.

Note there have been reports of FE cards, MSI, Zotac, Gigabyte and Manli all impacted.