Introduction

Why does Google insist on making its assistant situation so bad?

In theory, Assistant should be the best it’s ever been. It’s better at “understanding” what I ask for, and yet it is less capable than ever of actually doing it.

This post is a rant about my experience using modern assistants on Android, and why, while I used to use these features actively in the mid-to-late-2010s, I now don’t even bother with them.

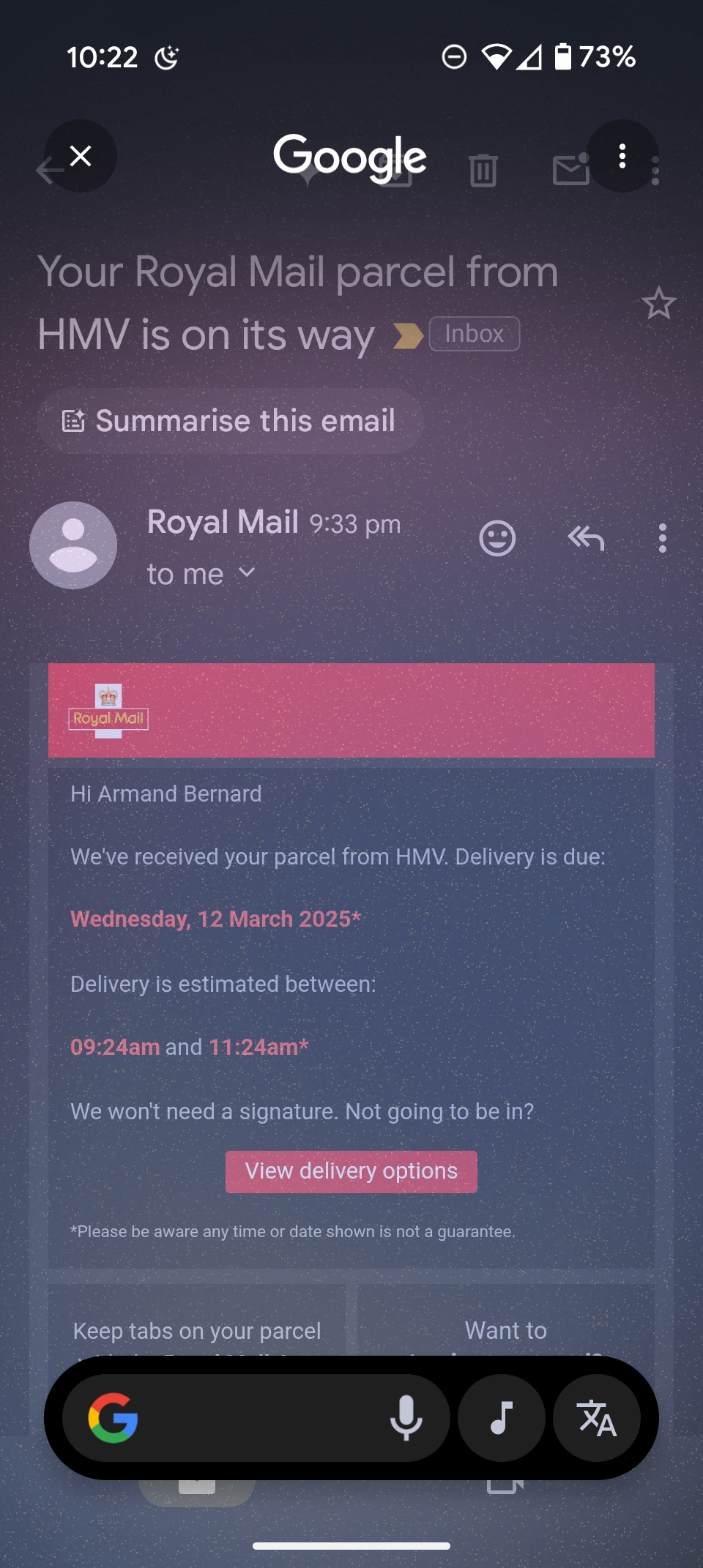

The task

Back in the late 2010s, I used to be able to hold the home button and ask the Google Assistant to create an event based on this email. It would grab the context from my screen, and do exactly that. This has been impossible, as far as I can tell, to do for years now.

Trying to find the “right” assistant

At some point, my phone stopped responding to “OK Google”. I still don’t know why it won’t work.

Holding down the Home bar (the home button went the way of the dodo) brings up an assistant-style UI, but it’s dumb as bricks and only Googles the web. Useless.

So, I installed Gemini. I asked it to perform a basic task. It responded “in live mode, I cannot do that”. When I asked how I could get it to create a calendar event for me, it could not answer the question, telling me instead to open my calendar app and create a new event. I know how to use a calendar. I want it to justify its existence by providing more value than a Google search. It was ultimately unable to answer the question.

Searching the internet, I found that apparently both of the ways I had been launching assistant features were the wrong ones. You have to hold down the power button; that’s how you launch the proper one. My internal response was:

No, that’s for the power menu. I don’t want to dedicate it to Assistant.

Well, apparently, that’s the only way to do it now, so there I go sacrificing another convenience by turning it on.

Pulling teeth with Gemini

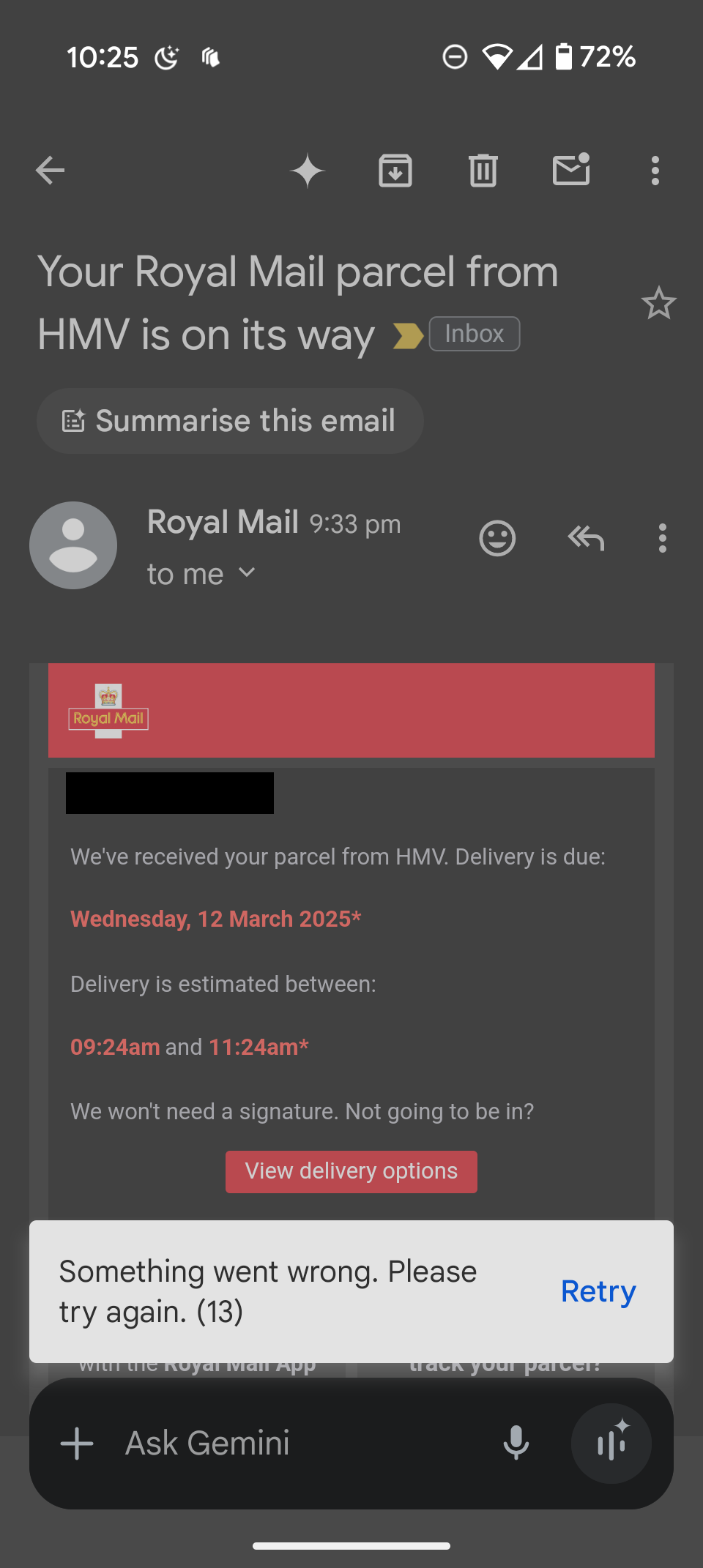

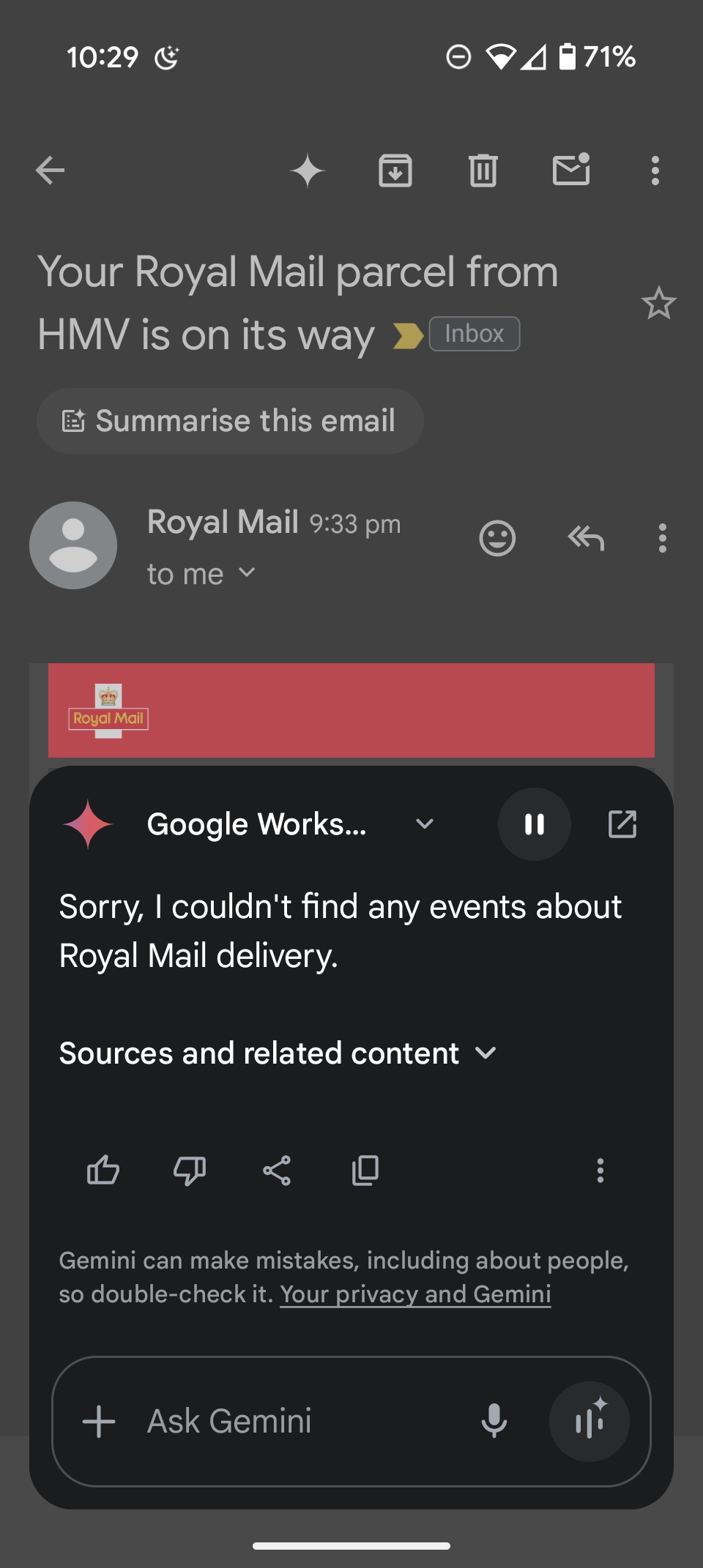

So I ask this power-menu-version of Gemini to do the same simple task. I tried 4 separate times.

First, it created a random event “Meeting with a client” on a completely different day (what?).

Second time it just crashed with an error.

The third time, it asked me which email to use, giving me a list, but that list did not contain the email I was interested in. I asked it to find the Royal Mail one. No success.

So, quite clearly, it wasn’t using screen content.

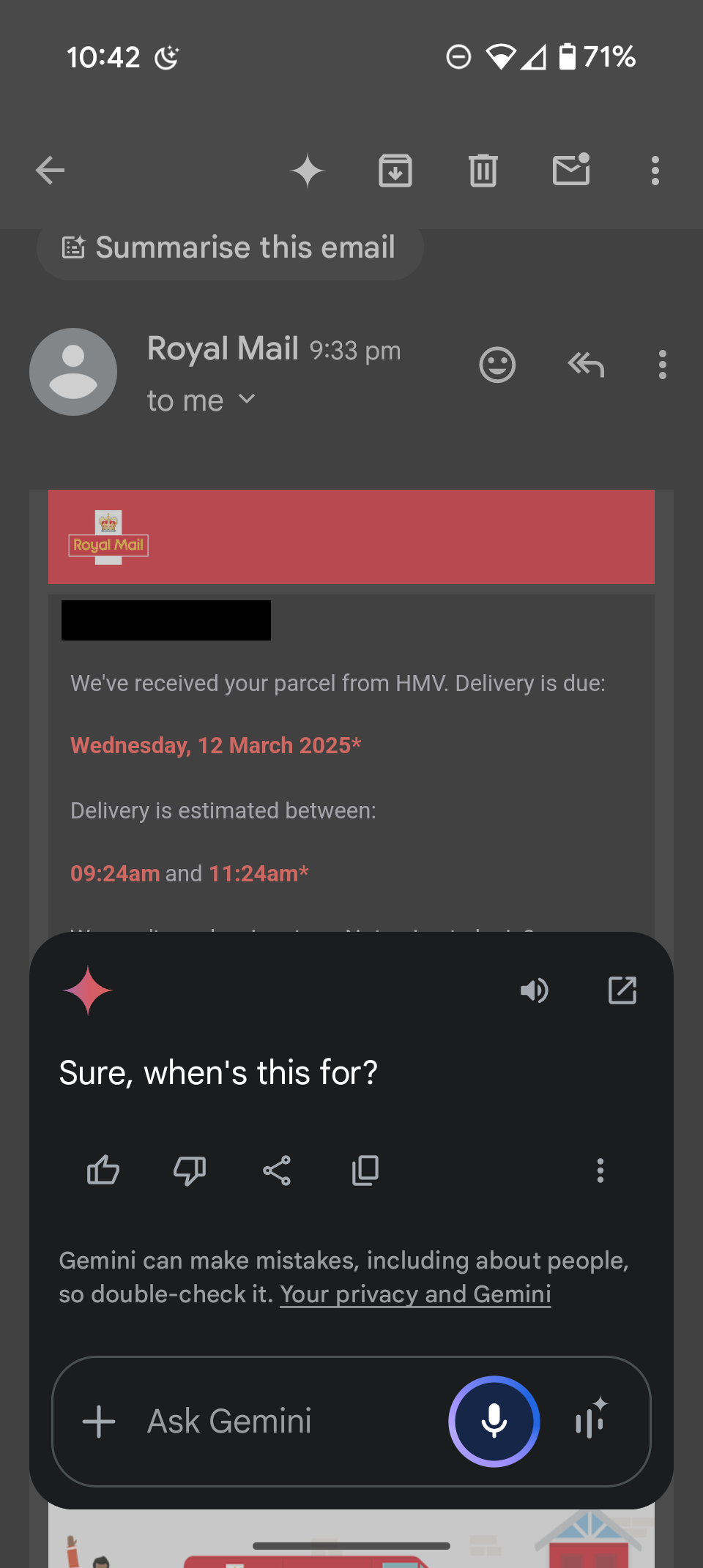

I rephrased the question: “Please create an event from the content on my screen”. It replied “Sure, when’s this for?”

I shouldn’t have to tell you. That’s the point. It’s right there.

Conclusion

There are too many damn assistant versions, and they are all bad. I can’t even imagine what it’s like to also have Bixby in the mix as a Samsung user. (Feel free to let me know below.)

It seems like none of them are able to pull context from what you are doing anymore, and you’ll spend more time fiddling and googling how to make them work than it would take for you to do the task yourself.

In some ways, assistants have gotten worse than they were almost 10 years ago, despite billions in investments.

As a little bonus, the internet is filled with AI slop that makes finding real facts and real studies from real people harder than ever.

I write this all mostly to blow off steam, as this stuff has been frustrating me for years now. Let me know what your experience has been like below; I could use some camaraderie.

I’m wondering if there’s a paid and private-ish personal assistant. I’m turning away from all the software where I’m the product. Any ideas?

Have you heard of Ollama? It’s an LLM engine that you can run at home. The speed, model size, context length, etc. that you can achieve really depend on your hardware. I’m using a low-to-mid-range graphics card and 32GB of RAM and get decent performance. Not lightning quick like ChatGPT, but fine for simple tasks.

Ollama
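For anyone curious what "simple tasks" could look like against a local Ollama server, here is a minimal sketch of the calendar-event task from the post. It assumes Ollama is running on its default port (11434) and that a model has already been pulled; the model name `llama3.2`, the prompt wording, the JSON field names, and the sample email text are all my own illustrative choices, not anything from the post. It only turns text into event fields, it does not read your screen or touch your calendar.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumption: default install).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(screen_text: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build the HTTP request asking the model to extract event details.

    The model name and the prompt are illustrative assumptions.
    """
    prompt = (
        "Extract a calendar event from the text below. "
        "Reply with JSON containing the keys: title, date, time, location.\n\n"
        + screen_text
    )
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,   # return one complete JSON response instead of chunks
        "format": "json",  # ask Ollama to constrain the output to valid JSON
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_event(screen_text: str, model: str = "llama3.2") -> dict:
    """Send the request to the local server and parse the model's JSON reply."""
    with urllib.request.urlopen(build_request(screen_text, model), timeout=120) as resp:
        body = json.load(resp)
    # The generated text lives in the "response" field of the reply.
    return json.loads(body["response"])


if __name__ == "__main__":
    # Hypothetical email text, standing in for whatever is on screen.
    sample = "Your parcel will be delivered on 14 March between 9am and 1pm."
    print(extract_event(sample))
```

How well this works depends heavily on the model you pick, and small models will sometimes get the date wrong, so treat the output as a draft to check rather than something to trust blindly.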

I’ve been doing a 90-day test of Perplexity Pro and so far it’s my front runner. They recently released an assistant that can launch in place of Google Assistant. It’s able to use screen context and interact with some apps, you can limit what info they collect, they don’t sell your data, and it connects to multiple models.

I like the fact that it looks like you can turn off how it saves my data. But I’m wondering if there are any Europe-based alternatives.

I don’t know of any directly comparable assistants from Europe, unfortunately. Mistral AI is French and produces a decent LLM, but I was underwhelmed with the software ecosystem. It’s essentially just a chatbot.

For most people, use Open Web UI (along with its many extensions) and the LLM API of your choice. There are hundreds to choose from.

You can run an endpoint for it locally if you have a big GPU, but TBH it’s not great unless you have at least like 10GB of VRAM, ideally 20GB.
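For a rough sense of where VRAM figures like these come from, here is a back-of-the-envelope sketch. The only solid part is that model weights take roughly (number of parameters × bits per weight ÷ 8) bytes; the 4-bit default and the flat 1.5GB overhead for context cache and runtime buffers are assumptions I picked for illustration, and real usage varies with context length and the software you run.

```python
def estimate_vram_gb(params_billions: float,
                     bits_per_weight: int = 4,
                     overhead_gb: float = 1.5) -> float:
    """Very rough VRAM estimate for running a model locally.

    weights: params * bits / 8 bytes, expressed in GB (1 GB = 1e9 bytes here).
    overhead_gb: assumed flat allowance for cache and buffers (illustrative).
    """
    weights_gb = params_billions * bits_per_weight / 8
    return weights_gb + overhead_gb


if __name__ == "__main__":
    # Example sizes chosen for illustration only.
    for size in (7, 14, 32):
        print(f"{size}B parameters at 4-bit: ~{estimate_vram_gb(size):.1f} GB")
```

By this estimate a 14B model at 4-bit lands a bit under 10GB and a 32B model a bit under 20GB, which is at least in the same ballpark as the numbers above.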

Hmmm I couldn’t really find anything. The only way to guarantee that is to have models that run purely locally, but until very recently that wasn’t feasible.

Smaller AI models that could run on a phone are now doable, but making them useful requires a lot of dev time and only giant data-guzzling companies have tried so far.